CI for InstructLab

Unit tests

All unit tests currently live in the `tests/` directory.

Functional tests

The functional test script can be found at `scripts/functional-tests.sh`.

End-to-end (E2E) tests

The end-to-end test script can be found at `scripts/basic-workflow-tests.sh`. This script takes arguments that control which features are exercised, so test coverage can vary with the resources available on a given test runner.

There is currently a default E2E job that runs automatically on all PRs and after commits merge to `main` or release branches.
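As a rough illustration of the triggering rule described above, the following Python sketch decides whether the default E2E job would run for a given GitHub Actions event. It is not the repository's workflow configuration: the function name and the `release-*` branch pattern are assumptions made for illustration.

```python
import fnmatch

# Assumption: release branches match "release-*"; the repository's actual
# naming pattern may differ.
DEFAULT_BRANCH = "main"
RELEASE_BRANCH_PATTERN = "release-*"


def default_e2e_should_run(event_name: str, branch: str) -> bool:
    """Return True if the default E2E job would run for this event (hypothetical helper).

    event_name mirrors GitHub Actions event names ("pull_request", "push", ...).
    branch is the pushed branch for push events.
    """
    if event_name == "pull_request":
        # The default job runs on every PR.
        return True
    if event_name == "push":
        # After a merge, only main and release branches trigger the job.
        return branch == DEFAULT_BRANCH or fnmatch.fnmatchcase(branch, RELEASE_BRANCH_PATTERN)
    # Any other event (schedule, workflow_dispatch, ...) is out of scope here.
    return False
```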

Other E2E jobs can be triggered manually from the repository's Actions page. These run on a variety of instance types and are launched at the discretion of repository maintainers.

E2E Test Coverage

You can pass the following flags to the `basic-workflow-tests.sh` script to test various features of `ilab`. You can see examples of these flags in use within the E2E job configuration files found here.

| Flag | Feature |
|---|---|
| | Run model evaluation |
| | Run minimal configuration |
| | Use Mixtral model (4-bit quantized) |
| | Run “fullsize” training |
| | Run “fullsize” SDG |
| | Run with Granite model |
| | Run with vLLM for serving |

Trigger via GitHub Web UI

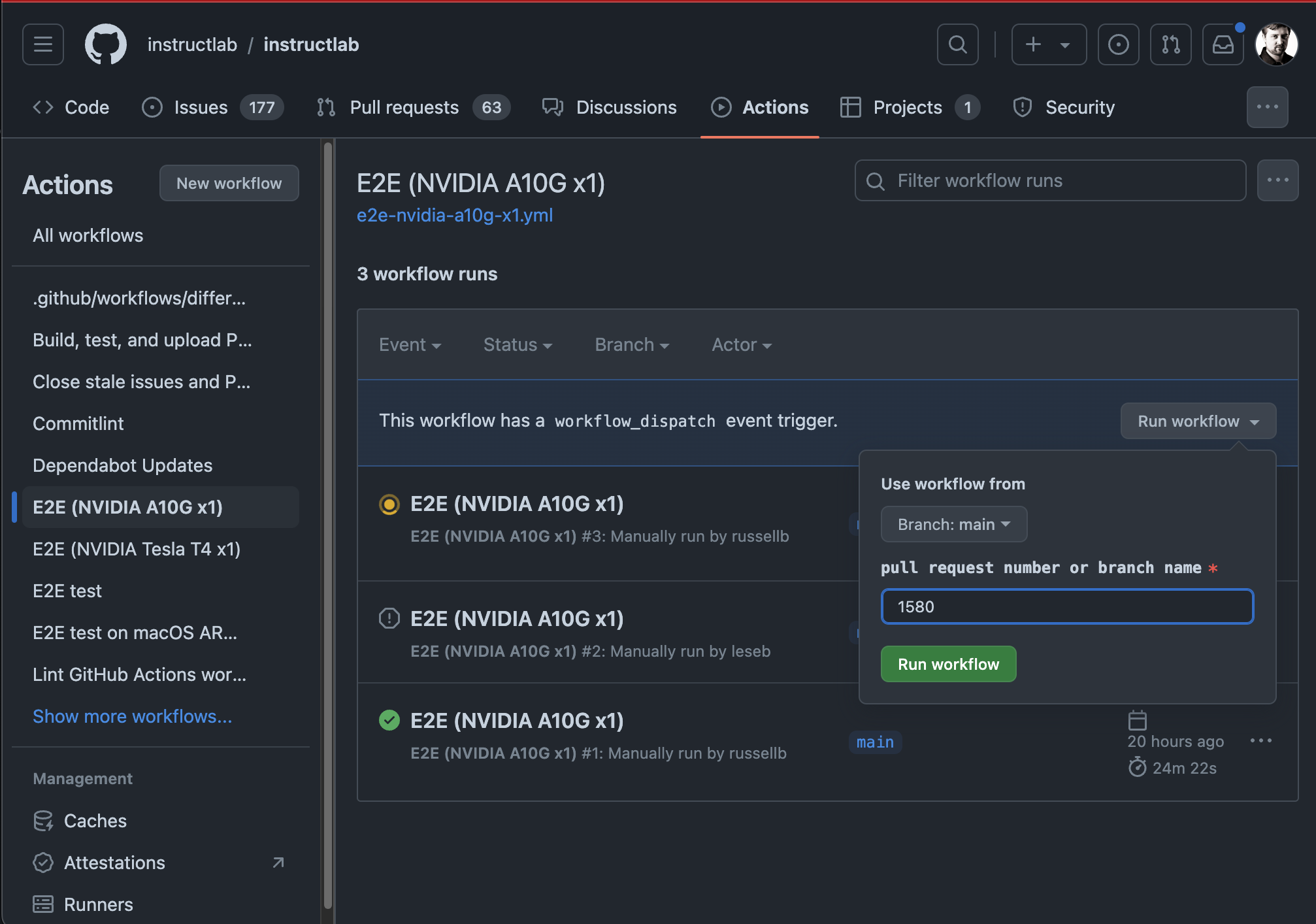

E2E jobs that are launched manually take an input field that specifies the PR number or git branch to run against. If you run them against a PR, they automatically post a comment to the PR when the tests begin and end, making it easier for those involved in the PR to follow the results.
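One plausible way a manually triggered job could interpret that input is sketched below in Python. The rule "all digits means a PR number, anything else is a branch" and the function name `resolve_e2e_target` are assumptions, not the workflows' actual logic; `refs/pull/<n>/head` is the ref GitHub exposes for a pull request's head commit.

```python
def resolve_e2e_target(pr_or_branch: str) -> tuple[str, str]:
    """Interpret the manual-trigger input as a PR number or a branch (hypothetical helper).

    Returns a (kind, git_ref) pair, where kind is "pr" or "branch".
    """
    value = pr_or_branch.strip()
    if not value:
        raise ValueError("input must be a PR number or a branch name")
    if value.isascii() and value.isdigit():
        # Assumption: a purely numeric value is a PR number. GitHub publishes
        # the PR's head commit under refs/pull/<n>/head.
        return "pr", f"refs/pull/{int(value)}/head"
    # Otherwise treat the value as a branch name.
    return "branch", f"refs/heads/{value}"
```

Only the `"pr"` case would need the extra step of posting start and end comments back to the pull request.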

1. Visit the Actions tab.
2. Click on the E2E test workflow on the left side of the page.
3. Click on the `Run workflow` button on the right side of the page.
4. Enter a branch name or a PR number in the input field.
5. Click the green `Run workflow` button.

The image at the top of this section shows an example of using the GitHub Web UI to launch an E2E workflow.
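The same manual trigger can also be issued without the web UI through GitHub's REST endpoint for workflow dispatch events (`POST /repos/{owner}/{repo}/actions/workflows/{workflow_id}/dispatches`). The Python sketch below only builds the request. The workflow file name `e2e-example.yml` and the input name `pr_or_branch` are hypothetical placeholders, so check the `workflow_dispatch` inputs declared in the actual workflow file before using it.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_dispatch_request(
    owner: str,
    repo: str,
    workflow_file: str,
    target: str,
    token: str,
    ref: str = "main",
    input_name: str = "pr_or_branch",  # hypothetical input name
) -> urllib.request.Request:
    """Build (but do not send) a workflow_dispatch request for an E2E workflow."""
    url = f"{API_ROOT}/repos/{owner}/{repo}/actions/workflows/{workflow_file}/dispatches"
    # "ref" selects which branch's copy of the workflow file runs;
    # "inputs" carries the PR number or branch name to test.
    body = {"ref": ref, "inputs": {input_name: target}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )
```

Sending it with `urllib.request.urlopen(req)` requires a token that is allowed to dispatch workflows on the repository; a successful dispatch typically returns `204 No Content`. The GitHub CLI offers the same operation as `gh workflow run <workflow-file> -f <input>=<value>`.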

Current GPU-enabled Runners

The project currently supports the following runners for the E2E jobs:

- GitHub built-in GPU runner, referred to as `ubuntu-gpu` in our workflow files. Only `e2e.yml` uses this runner.
- Ephemeral GitHub runners launched on demand on AWS. Most workflows work this way, giving us access to a wider variety of infrastructure at lower cost.

E2E Workflows

| File | T-Shirt Size | Runner Host | Instance Type | GPU Type | OS |
|---|---|---|---|---|---|
| `e2e.yml` | Small | GitHub | N/A | 1 x NVIDIA Tesla T4 w/ 16 GB VRAM | Ubuntu |
| | Small | AWS | | 1 x NVIDIA Tesla T4 w/ 16 GB VRAM | CentOS Stream 9 |
| | Medium | AWS | | 1 x NVIDIA A10G w/ 24 GB VRAM | CentOS Stream 9 |
| | Large | AWS | | 4 x NVIDIA A10G w/ 24 GB VRAM (96 GB) | CentOS Stream 9 |
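To make the capacity differences between the T-shirt sizes explicit, the Python sketch below encodes the rows of the runner table and computes aggregate VRAM per runner (for example, 4 x 24 GB = 96 GB for the Large runner). The `E2ERunner` class and the `runners_with_min_vram` helper are hypothetical; workflow file names and AWS instance types are left out because this document does not list them for the AWS rows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class E2ERunner:
    size: str             # T-shirt size from the table
    host: str             # "GitHub" or "AWS"
    gpu_model: str
    gpu_count: int
    vram_per_gpu_gb: int
    os: str

    @property
    def total_vram_gb(self) -> int:
        # Aggregate VRAM across all GPUs attached to the runner.
        return self.gpu_count * self.vram_per_gpu_gb


RUNNERS = [
    E2ERunner("Small", "GitHub", "NVIDIA Tesla T4", 1, 16, "Ubuntu"),
    E2ERunner("Small", "AWS", "NVIDIA Tesla T4", 1, 16, "CentOS Stream 9"),
    E2ERunner("Medium", "AWS", "NVIDIA A10G", 1, 24, "CentOS Stream 9"),
    E2ERunner("Large", "AWS", "NVIDIA A10G", 4, 24, "CentOS Stream 9"),
]


def runners_with_min_vram(min_gb: int) -> list[E2ERunner]:
    """Return runners whose total VRAM meets a (hypothetical) job requirement."""
    return [r for r in RUNNERS if r.total_vram_gb >= min_gb]
```

Note that aggregate VRAM only helps workloads that can be split across GPUs; a job needing more than 24 GB on a single GPU fits none of these runners.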

E2E Test Matrix

| Area | Feature | | | | |
|---|---|---|---|---|---|
| Serving | llama-cpp | ✅ | ✅ | ✅ | ✅ (temporary) |
| | vllm | ⎯ | ⎯ | ⎯ | ❌ |
| Generate | simple | ✅ | ✅ | ✅ | ⎯ |
| | full | ⎯ | ⎯ | ⎯ | ✅ |
| Training | legacy+Linux | ⎯ | ⎯ | ✅ | ⎯ |
| | legacy+Linux+4-bit-quant | ✅ | ✅ | ⎯ | ⎯ |
| | training-lib | ⎯ | ⎯ | ✅ (*1) | ❌ |
| Eval | eval | ⎯ | ⎯ | ✅ (*2) | ❌ |

Points of clarification (*):

1. The `training-lib` testing does not use the output of the Generate step. https://github.com/instructlab/instructlab/issues/1655
2. The `eval` testing does not evaluate the output of the Training step. https://github.com/instructlab/instructlab/issues/1540
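The test matrix can also be encoded as data to answer questions such as which jobs cover a feature or where gaps remain. In this Python sketch, columns are identified only by position (left to right) because their headers are not given here, and the reading of the markers (✅ covered, ❌ a known gap, ⎯ not exercised by that job) is our interpretation rather than a documented legend. The helper names are hypothetical.

```python
# Status markers as they appear in the matrix.
COVERED, NOT_COVERED, NOT_RUN = "✅", "❌", "⎯"

# (area, feature) -> status for each job column, left to right.
MATRIX = {
    ("Serving", "llama-cpp"): (COVERED, COVERED, COVERED, COVERED),  # 4th marked temporary
    ("Serving", "vllm"): (NOT_RUN, NOT_RUN, NOT_RUN, NOT_COVERED),
    ("Generate", "simple"): (COVERED, COVERED, COVERED, NOT_RUN),
    ("Generate", "full"): (NOT_RUN, NOT_RUN, NOT_RUN, COVERED),
    ("Training", "legacy+Linux"): (NOT_RUN, NOT_RUN, COVERED, NOT_RUN),
    ("Training", "legacy+Linux+4-bit-quant"): (COVERED, COVERED, NOT_RUN, NOT_RUN),
    ("Training", "training-lib"): (NOT_RUN, NOT_RUN, COVERED, NOT_COVERED),
    ("Eval", "eval"): (NOT_RUN, NOT_RUN, COVERED, NOT_COVERED),
}


def columns_covering(feature: str) -> list[int]:
    """Return the 1-based column positions where a feature is marked covered."""
    for (_, name), statuses in MATRIX.items():
        if name == feature:
            return [i + 1 for i, s in enumerate(statuses) if s == COVERED]
    raise KeyError(feature)


def open_gaps() -> list[tuple[str, int]]:
    """Return (feature, column) pairs marked as gaps, in table order."""
    return [
        (name, i + 1)
        for (_, name), statuses in MATRIX.items()
        for i, s in enumerate(statuses)
        if s == NOT_COVERED
    ]
```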